Web Scraping using Python with 7 complete projects

A complete end-to-end web scraping tutorial using Python, with every step and all code explained in detail. Rating: 3.2 out of 5 (87 ratings). Dec 05, 2017

In this tutorial, we will talk about Python web scraping and how to scrape web pages using libraries such as Beautiful Soup and Selenium, along with tools like PhantomJS. You’ll learn how to scrape static web pages, dynamic pages (Ajax-loaded content), and iframes, how to get specific HTML elements, how to handle cookies, and much more.

Why Use Python For Web Scraping?

Python 3 is one of the most popular languages for web scraping because scrapers are quick and easy to write in it. Many of the web scraping tools that ship with Kali Linux are also written in Python. Enough theory; let’s start scraping the web using the Beautiful Soup library.

In this article I will show you how you can create your own dataset by web scraping with Python. Web scraping means extracting a set of data from the web. If you are a programmer, a data scientist, an engineer, or anyone who works with data, web scraping skills will help you in your career.

The need for extracting data from websites is increasing. When we work on data-related projects such as price monitoring, business analytics, or news aggregation, we always need to record data from websites. However, copying and pasting data line by line is outdated. In this article, we will teach you how to become an “insider” in extracting data from websites, that is, how to do web scraping with Python.

Step 0: Introduction

Web scraping is a technique that helps us transform unstructured HTML data into structured data in a spreadsheet or database. Besides writing Python code, other options for collecting website data are using an API or a data extraction tool such as Octoparse.

Some large websites, such as Airbnb or Twitter, provide an API for developers to access their data. API stands for Application Programming Interface, an interface through which two applications communicate with each other. For most people, an API offered by the website itself is the best way to obtain its data.

However, most websites don’t have API services. Sometimes, even if they provide an API, the data you can get is not what you want. Therefore, writing a Python script to build a web crawler becomes another powerful and flexible solution.

So why should we use Python instead of other languages?

- Flexibility: As we know, websites update quickly. Not only the content but also the page structure changes frequently. Python is an easy-to-use language because it is dynamically typed and highly productive. Therefore, people can change their code easily and keep up with the speed of web updates.

- Powerful: Python has a large collection of mature libraries. For example, requests and beautifulsoup4 help us fetch URLs and pull information out of web pages. Selenium helps us get past some anti-scraping techniques by giving web crawlers the ability to mimic human browsing behavior. In addition, re, numpy, and pandas help us clean and process the data.

Now let's start our trip on web scraping using Python!

Step 1: Import Python libraries

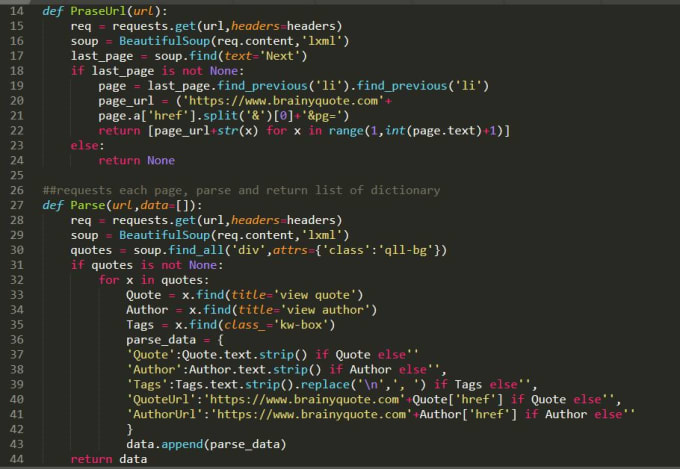

In this tutorial, we will show you how to scrape reviews from Yelp. We will use two libraries: BeautifulSoup from the bs4 package and urlopen from urllib.request. Both are commonly used when building a web crawler with Python. The first step is to import them so that we can use their functions.
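The import step described above might look like the following. This is a minimal sketch that assumes the `beautifulsoup4` package is already installed (for example with `pip install beautifulsoup4`); `urllib.request` is part of the standard library.

```python
# Step 1: import the two tools used in the rest of the tutorial.
from urllib.request import urlopen  # standard library: opens URLs
from bs4 import BeautifulSoup       # third-party package: parses HTML
```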

Step 2: Extract the HTML from web page

We need to extract reviews from “https://www.yelp.com/biz/milk-and-cream-cereal-bar-new-york?osq=Ice+Cream”. So first, let’s save the URL in a variable called URL. Then we can access the content of this web page and save the HTML in “ourUrl” by using the urlopen() function from urllib.request.

Then we apply BeautifulSoup to parse the page.

Now that we have the “soup”, which is the parsed HTML of this page, we can use a function called prettify() to format it with indentation and print it to see the nested structure of the HTML in the “soup”.
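A sketch of this step is shown below. The helper name `fetch_soup`, the timeout, and the browser-like `User-Agent` header are assumptions added for illustration; they are not part of the original tutorial. To keep the example runnable offline, `prettify()` is demonstrated on a small inline snippet rather than on the live page.

```python
from urllib.request import Request, urlopen
from bs4 import BeautifulSoup

URL = "https://www.yelp.com/biz/milk-and-cream-cereal-bar-new-york?osq=Ice+Cream"


def fetch_soup(url, timeout=10):
    """Download a page and return it parsed as a BeautifulSoup tree."""
    # Assumption: some sites reject the default urllib User-Agent,
    # so a browser-like one is sent instead.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        html = response.read()
    return BeautifulSoup(html, "html.parser")


# prettify() re-indents the parsed tree so the nesting is easy to read.
sample = BeautifulSoup("<div><p>Great ice cream!</p></div>", "html.parser")
print(sample.prettify())
```

Calling `fetch_soup(URL)` would return the soup for the Yelp page, provided the site allows the request.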

Step 3: Locate and scrape the reviews

Next, we should find the reviews in the HTML of this web page, extract them, and store them. Elements in a web page are identified by their tag name and by attributes such as “id” or “class”; not every element has an ID, so we need to INSPECT the reviews on the page to see how they are marked up.

After clicking 'Inspect element' (or 'Inspect', depending on the browser), we can see the HTML of the reviews.

In this case, the reviews are located under the tag called “p”. So we will first use the function called find_all() to find the parent nodes of these reviews, and then locate all elements with the tag “p” under each parent node in a loop. As we find the “p” elements, we store them in a list called “review”, which starts out empty.

Now we have all the reviews from that page. Let’s see how many reviews we have extracted.
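The lookup described in this step can be sketched as follows. The sample markup and the class name `review-content` are hypothetical stand-ins, since the real class names on Yelp have to be read from the browser inspector and change over time.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for the review section of a page.
SAMPLE_HTML = """
<div class="review-content"><p lang="en">Best cereal ice cream!<br/>Will come back.</p></div>
<div class="review-content"><p lang="en">Long line, but worth it.</p></div>
<div class="sidebar"><p>Not a review.</p></div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

review = []  # starts empty and collects every review paragraph
for parent in soup.find_all("div", class_="review-content"):
    for paragraph in parent.find_all("p"):
        review.append(paragraph)

print(len(review))  # 2
```

Restricting the search to the parent nodes first keeps unrelated “p” elements, such as the sidebar paragraph here, out of the result.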

Step 4: Clean the reviews

You may notice that there is still some unwanted markup, such as “<p lang=’en’>” at the beginning of each review, “<br/>” in the middle of the reviews, and “</p>” at the end of each review.

“<br/>” stands for a single line break. We don’t need any line breaks in the reviews, so we will delete them. Also, “<p lang=’en’>” and “</p>” are the opening and closing tags of the paragraph element, and we need to delete them as well.
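One way to do this cleanup on the review strings is sketched below with the standard `re` and `html` modules. The function name `clean_review` is hypothetical, and replacing each line break with a space (rather than with nothing) is a choice made here so that neighbouring words do not run together.

```python
import re
from html import unescape


def clean_review(raw_html):
    """Strip the <p ...> wrapper and <br/> tags from one review's HTML."""
    text = re.sub(r"<br\s*/?>", " ", raw_html)    # line breaks become spaces
    text = re.sub(r"</?p(\s[^>]*)?>", "", text)   # drop opening and closing <p>
    text = unescape(text)                         # decode entities such as &amp;
    return re.sub(r"\s+", " ", text).strip()      # collapse leftover whitespace


print(clean_review("<p lang='en'>Best cereal ice cream!<br/>Will come back.</p>"))
# Best cereal ice cream! Will come back.
```

If the reviews are still BeautifulSoup elements rather than strings, calling `get_text(" ", strip=True)` on each element gives a similar result without regular expressions.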

Finally, we have all the clean reviews, with fewer than 20 lines of code.
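Since the goal stated in Step 0 is structured data in a spreadsheet or database, the cleaned reviews could then be written out as CSV. The sketch below uses only the standard library; the function name `reviews_to_csv` and the column names are assumptions for illustration.

```python
import csv
import io


def reviews_to_csv(reviews):
    """Return the reviews as CSV text with one numbered row per review."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["review_id", "text"])
    for index, text in enumerate(reviews, start=1):
        writer.writerow([index, text])  # commas inside text are quoted automatically
    return buffer.getvalue()


print(reviews_to_csv(["Great, really!", "Fine."]))
```

To save a file instead, the same writer can be pointed at `open("reviews.csv", "w", newline="", encoding="utf-8")`.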

This is just a demo that scrapes 20 reviews from Yelp. In real cases, we may face many other situations. For example, we will need steps like pagination to go to the other pages and extract the remaining reviews for this shop. We may also need to scrape other information, such as reviewer name, reviewer location, review time, rating, and check-ins.
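Pagination often comes down to requesting the same URL with a changing offset. The sketch below builds such a list of page URLs with the standard library. The query parameter name `start` and the helper name `page_urls` are assumptions for illustration; the parameter a given site actually uses has to be checked in the browser's address bar.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_urls(base_url, total_reviews, page_size=20, offset_param="start"):
    """Build one URL per results page by varying an offset query parameter."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))  # keep the existing parameters
    urls = []
    for offset in range(0, total_reviews, page_size):
        query[offset_param] = str(offset)
        urls.append(urlunsplit(parts._replace(query=urlencode(query))))
    return urls


for url in page_urls("https://example.com/biz/shop?osq=Ice+Cream", total_reviews=45):
    print(url)
```

Each generated URL can then be fetched and parsed in the same way as the first page.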

To implement the operations above and get more data, we would need to learn more functions and libraries, such as Selenium or regular expressions. It would be interesting to spend more time drilling into the challenges of web scraping.

However, if you are looking for a simpler way to do web scraping, Octoparse could be your solution. Octoparse is a web scraping tool that helps you easily obtain information from websites. Check out this tutorial about how to scrape reviews from Yelp with Octoparse. Feel free to contact us when you need a powerful web scraping tool for your business or project!

Author: Jiahao Wu

It is a well-known fact that Python is one of the most popular programming languages for data mining and Web Scraping. There are tons of libraries and niche scrapers around the community, but we’d like to share the 5 most popular of them.

Most of the advantages of these libraries are also available through our API, and some of the libraries can be used together with it.

The Top 5 Python Web Scraping Libraries in 2020

1. Requests

Requests is well known to most Python developers as a fundamental tool for getting raw HTML data from web resources.

To install the library, run `pip install requests` in your command prompt or terminal.

After this, you can check the installation from the Python REPL by importing the package.
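A quick check along these lines is sketched below, assuming the package was installed with `pip install requests`. The helper `fetch_html` and its timeout value are illustrative additions, not part of the original article.

```python
import requests

# If the import works, printing the version confirms the installation.
print(requests.__version__)


def fetch_html(url, timeout=10):
    """Return the raw HTML of a page, raising an exception on HTTP errors."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    return response.text
```

The text returned by `fetch_html` can be handed directly to a parser such as LXML or BeautifulSoup.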

- Official docs URL: https://requests.readthedocs.io/en/latest/

- GitHub repository: https://github.com/psf/requests

2. LXML

When HTML parsing speed matters, keep in mind the LXML library. It is a real champion at HTML and XML parsing for web scraping, so software based on LXML can be used to scrape frequently changing pages, such as gambling sites that provide odds for live events.

To install the library, run `pip install lxml` in your command prompt or terminal.
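As a small illustration of LXML parsing, the sketch below extracts values from an inline HTML snippet with XPath. The markup, class names, and odds are made up for this example and do not come from any real site.

```python
from lxml import html

# Hypothetical markup for a list of live-event odds.
SAMPLE = """<html><body><ul id="odds">
<li class="event"><span class="team">Home</span><span class="price">1.85</span></li>
<li class="event"><span class="team">Away</span><span class="price">2.10</span></li>
</ul></body></html>"""

tree = html.fromstring(SAMPLE)

odds = {}
for item in tree.xpath('//li[@class="event"]'):
    # Relative XPath expressions search only inside the current <li>.
    team = item.xpath('./span[@class="team"]/text()')[0]
    price = float(item.xpath('./span[@class="price"]/text()')[0])
    odds[team] = price

print(odds)
```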

The LXML Toolkit is a really powerful instrument and the whole functionality can’t be described in just a few words, so the following links might be very useful:

- Official docs URL: https://lxml.de/index.html#documentation

- GitHub repository: https://github.com/lxml/lxml/

3. BeautifulSoup

Probably 80% of all the Python web scraping tutorials on the Internet use the BeautifulSoup4 library as a simple tool for dealing with retrieved HTML in the most human-friendly way: selectors, attributes, the DOM tree, and much more. It is also a good choice for porting code to or from JavaScript's Cheerio or jQuery.

To install this library, run `pip install beautifulsoup4` in your command prompt or terminal.

As mentioned before, there are plenty of tutorials around the Internet about BeautifulSoup4 usage, so do not hesitate to Google it!
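The selector support mentioned above can be seen in a short sketch like the following, which uses CSS selectors in much the same way as jQuery or Cheerio code. The markup is a made-up example.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for a small list of book links.
SAMPLE = """<ul class="books">
<li><a class="title" href="/b/1">Dune</a></li>
<li><a class="title" href="/b/2">Emma</a></li>
</ul>"""

soup = BeautifulSoup(SAMPLE, "html.parser")

# select() takes a CSS selector; attributes are read like dictionary keys.
links = [(a.get_text(), a["href"]) for a in soup.select("ul.books a.title")]
print(links)
```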

- Official docs URL: https://www.crummy.com/software/BeautifulSoup/bs4/doc/

- Launchpad repository: https://code.launchpad.net/~leonardr/beautifulsoup/bs4

4. Selenium

Selenium is the most popular browser automation tool built on WebDriver, with bindings for most programming languages. Quality assurance engineers, automation specialists, developers, and data scientists have all used it at one time or another. For web scraping it is like a Swiss Army knife: because it drives a real browser, it can perform the same actions as a real user, such as opening pages, clicking buttons, filling in forms, and much more, often without additional libraries.

To install this library, run `pip install selenium` in your command prompt or terminal.

Getting started with web crawling in Selenium takes only a few lines of code.

As this example illustrates only 1% of Selenium's power, we’d like to offer the following useful links:

- Official docs URL: https://selenium-python.readthedocs.io/

- GitHub repository: https://github.com/SeleniumHQ/selenium

5. Scrapy

Scrapy is a full web scraping framework, developed by a team with a lot of enterprise scraping experience. Software built on top of this library can be a crawler, a scraper, a data extractor, or even all of these together.

To install this library, run `pip install scrapy` in your command prompt or terminal.

We definitely suggest you start with a tutorial to know more about this piece of gold: https://docs.scrapy.org/en/latest/intro/tutorial.html

As usual, the useful links are below:

- Official docs URL: https://docs.scrapy.org/en/latest/index.html

- GitHub repository: https://github.com/scrapy/scrapy

What web scraping library to use?

So, it’s all up to you and up to the task you’re trying to resolve, but always remember to read the Privacy Policy and Terms of the site you’re scraping 😉.