In the Azure Storage Explorer application, select a container under a storage account. The main pane shows a list of the blobs in the selected container. To download a blob with Azure Storage Explorer, select the blob and then select Download on the ribbon. A file dialog opens in which you can enter a file name.

Azure Blob Storage helps you create data lakes for your analytics needs and provides storage for building cloud-native and mobile apps. Tiered storage lets you optimize costs for long-term data, and storage scales flexibly for high-performance computing and machine learning workloads.

Azure Data Explorer is a fast, highly scalable data exploration service for log and telemetry data. It supports ingestion (data loading) from Event Hubs, IoT Hubs, and blobs written to blob containers.

Let us first understand what a blob is. The word ‘Blob’ stands for Binary Large OBject. Blobs include images, text files, videos and audio files. The blob service offered by Windows Azure has three types of blobs: block, append and page blobs.

Block blobs are collections of individual blocks, each with a unique block ID. Block blobs allow users to upload large amounts of data.

Append blobs are also made up of blocks, but they are optimized for append operations, which makes them efficient for scenarios such as logging.

Page blobs are collections of 512-byte pages and support random read and write operations. If the type is not specified when a blob is created, it defaults to a block blob.
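Block blobs are uploaded as separately identified blocks, and those blocks are then committed as an ordered list. The stdlib Python sketch below only simulates that model locally and makes no Azure calls. It splits a payload into blocks and gives each block a Base64-encoded ID. All IDs in one blob have the same length, which the Blob service requires. It then reassembles the blob from the ordered ID list. The tiny `BLOCK_SIZE`, the `block-NNNNNN` ID scheme and the helper names are illustrative assumptions.

```python
import base64
from typing import List, Sequence, Tuple

# Illustrative block size only; real uploads use much larger blocks (MiB range).
BLOCK_SIZE = 4


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> List[Tuple[str, bytes]]:
    """Split data into (block_id, chunk) pairs.

    Block IDs must be Base64-encoded and all the same length within one blob,
    so the block index is zero-padded before encoding.
    """
    blocks = []
    for index, start in enumerate(range(0, len(data), block_size)):
        raw_id = f"block-{index:06d}".encode("ascii")
        block_id = base64.b64encode(raw_id).decode("ascii")
        blocks.append((block_id, data[start:start + block_size]))
    return blocks


def commit_block_list(blocks: List[Tuple[str, bytes]], block_list: Sequence[str]) -> bytes:
    """Assemble the blob content in the order given by block_list,
    mimicking how committing a block list defines the final blob."""
    by_id = dict(blocks)
    return b"".join(by_id[block_id] for block_id in block_list)


blocks = split_into_blocks(b"hello blob storage")
ids = [block_id for block_id, _ in blocks]
print(len(blocks), ids[0])
```

Because the committed list, not the upload order, defines the blob, blocks could in principle be uploaded in parallel and committed afterwards.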

Every blob must reside in a container in your storage account. Here is how to create a container in Azure Storage.

Create a Container

Step 1 − Go to the Azure portal and open your storage account.

Step 2 − Create a container by clicking ‘Create new container’, as shown in the following image.

There are three options in the Access dropdown, which set who can access the blobs. ‘Private’ lets only the account owner access them. ‘Public Container’ allows anonymous access to all the contents of the container. ‘Public blob’ allows anonymous read access to individual blobs but does not allow anonymous listing of the container's contents.
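The difference between the three access levels can be stated as a small decision function. This is a minimal stdlib sketch that only encodes the rules described above. The enum values mirror the `x-ms-blob-public-access` values of the Blob REST API ('blob', 'container'), and 'private' stands for the header being absent. The operation names `read_blob` and `list_blobs` are hypothetical labels chosen for this example.

```python
from enum import Enum


class AccessLevel(Enum):
    """The three options of the portal's Access dropdown.

    Values mirror the x-ms-blob-public-access values ('blob', 'container');
    PRIVATE corresponds to the header being absent.
    """
    PRIVATE = "private"
    PUBLIC_BLOB = "blob"
    PUBLIC_CONTAINER = "container"


def anonymous_allowed(level: AccessLevel, operation: str) -> bool:
    """Return whether an unauthenticated request may perform the operation.

    operation is "read_blob" (download a blob by its URL) or
    "list_blobs" (enumerate the container's contents).
    """
    if operation == "read_blob":
        return level in (AccessLevel.PUBLIC_BLOB, AccessLevel.PUBLIC_CONTAINER)
    if operation == "list_blobs":
        return level is AccessLevel.PUBLIC_CONTAINER
    raise ValueError(f"unknown operation: {operation!r}")


for level in AccessLevel:
    print(level.name, anonymous_allowed(level, "read_blob"), anonymous_allowed(level, "list_blobs"))
```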

Upload a Blob using PowerShell

Step 1 − Right-click ‘Windows PowerShell’ in the taskbar and choose ‘Run ISE as Administrator’.

Step 2 − The following command lets you access your account. In all the commands, replace the highlighted fields with your own values.

Step 3 − Run the following command. It shows the details of your Azure account and makes sure that your subscription is set up.

Step 4 − Run the following command to upload your file.

Step 5 − To check if the file is uploaded, run the following command.
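After upload, every blob has an address of the form `https://<account>.blob.core.windows.net/<container>/<blob>` on the default Azure public-cloud endpoint. A blob in a container with public blob or public container access can be fetched anonymously at that URL. A private one needs credentials such as a SAS token. The stdlib helper below is a minimal sketch that builds such a URL. The account, container and blob names in the example are placeholders.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build a blob's URL on the default Azure public-cloud endpoint.

    '/' is left unescaped so virtual directories in blob names stay readable;
    spaces and other reserved characters are percent-encoded.
    """
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name, safe='/')}"


print(blob_url("mystorageaccount", "mycontainer", "reports/2019 summary.txt"))
```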

Download a Blob

Step 1 − Set the directory where you want to download the file.

Step 2 − Download it.

Remember the following −

Blob names are case sensitive, so type file and blob names exactly as they appear; PowerShell command names, by contrast, are not case sensitive.

Each command must be on one line, or be continued on the next line by ending the preceding line with a backtick (`), which is the line-continuation character in PowerShell.

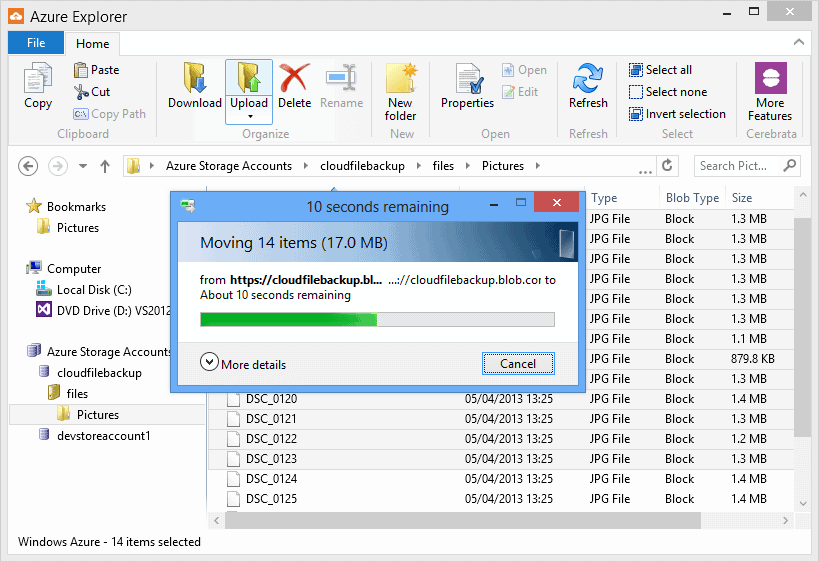

Manage Blobs using Azure Storage Explorer

Managing blobs is simple with the ‘Azure Storage Explorer’ interface, which works much like the Windows file and folder explorer. You can create a new container, upload blobs, view them in a list, and download them. You can also easily copy them to a secondary location. The following image makes the process clear: once an account is added, you can select it from the dropdown and get started. This makes working with Azure Storage very easy.

Last summer, Microsoft rebranded the Azure Kusto query engine as Azure Data Explorer. While it does not support fully elastic scaling, it does allow scaling a cluster up and out via an API or the Azure portal to adapt to different workloads. It also offers parquet support out of the box, which prompted me to spend some time looking into it.

Azure Data Explorer

With the heavy use of Apache Parquet datasets within my team at Blue Yonder, we are always looking for managed, scalable/elastic query engines on flat files besides the usual suspects like Drill, Hive, Presto or Impala.

For the following tests I deployed an Azure Data Explorer cluster with two Standard_D14_v2 instances, each with 16 vCores, 112 GiB RAM, 800 GiB SSD storage and a network bandwidth class of “extremely high” (which corresponds to 8 NICs).

Data Preparation: NY Taxi Dataset

As in the “Understanding parquet predicate pushdown” blog post, we use the NY Taxi dataset for the tests. It has a reasonable size and some nice properties, such as a mix of data types, and it includes some messy data (as all real-world data engineering problems do).

We can convert the csv files to parquet with pandas and pyarrow:

Each csv file is about 700 MiB, each parquet file about 180 MiB, and each file holds about 10 million rows.
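The file sizes above translate into a compression ratio and a per-row footprint. The arithmetic is short, but it makes the saving concrete. All three inputs are the approximate figures from the text.

```python
MIB = 1024 * 1024

csv_mib = 700          # approximate size of one monthly csv file (from the text)
parquet_mib = 180      # approximate size of the same month as parquet (from the text)
rows = 10_000_000      # approximate rows per file (from the text)

compression_ratio = csv_mib / parquet_mib
csv_bytes_per_row = csv_mib * MIB / rows
parquet_bytes_per_row = parquet_mib * MIB / rows

print(f"parquet is ~{compression_ratio:.1f}x smaller than csv")
print(f"csv: ~{csv_bytes_per_row:.0f} bytes/row, parquet: ~{parquet_bytes_per_row:.0f} bytes/row")
```

The parquet files are roughly 3.9 times smaller, about 19 bytes per row versus about 73 for csv.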

Data Ingestion

Azure Data Explorer supports control commands and query commands to interact with the cluster. Kusto control commands always start with a dot and are used to manage the service, query information about it, and explore, create and alter tables. The primary query language is the Kusto Query Language (KQL), but a subset of T-SQL is also supported.

The table schema definition supports a number of scalar data types:

| Type | Storage type (internal name) |
|---|---|
| bool | I8 |
| datetime | DateTime |
| guid | UniqueId |
| int | I32 |
| long | I64 |
| real | R64 |
| string | StringBuffer |
| timespan | TimeSpan |
| decimal | Decimal |

To create a table for the NY Taxi dataset, we can use the following control command with the table and column names and the corresponding data types:
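The command follows the `.create table TableName (Column:type, ...)` pattern. The small Python sketch below assembles such a command from (column, type) pairs and rejects types outside the scalar-type table above. The table name `Taxi` and the column subset are hypothetical and chosen for illustration. They are not the author's exact schema.

```python
from typing import List, Tuple

# Scalar type names from the table above
KUSTO_SCALAR_TYPES = {
    "bool", "datetime", "guid", "int", "long",
    "real", "string", "timespan", "decimal",
}


def create_table_command(table: str, columns: List[Tuple[str, str]]) -> str:
    """Build a `.create table` control command from (column, type) pairs."""
    for name, kusto_type in columns:
        if kusto_type not in KUSTO_SCALAR_TYPES:
            raise ValueError(f"unsupported type {kusto_type!r} for column {name!r}")
    schema = ", ".join(f"{name}:{kusto_type}" for name, kusto_type in columns)
    return f".create table {table} ({schema})"


# Hypothetical table name and column subset, for illustration only
taxi_columns = [
    ("VendorID", "int"),
    ("tpep_pickup_datetime", "datetime"),
    ("tpep_dropoff_datetime", "datetime"),
    ("passenger_count", "int"),
    ("trip_distance", "real"),
    ("fare_amount", "real"),
]
print(create_table_command("Taxi", taxi_columns))
```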

Ingest Data into Azure Data Explorer

The .ingest into table command can read data from Azure Blob Storage or Azure Data Lake Storage and import it into the cluster. This means it ingests the data and stores it locally for better performance. Authentication is done with Azure SAS tokens.

Importing one month of csv data takes about 110 seconds. As a reference, parsing the same csv file with pandas.read_csv takes about 19 seconds.

One should always use obfuscated strings (the h in front of the string values) to ensure that the SAS token is never recorded or logged:
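The ingest command combines a table name, one or more source URIs written as obfuscated `h'...'` literals, and an optional `with (...)` list of ingestion properties. The Python sketch below only builds that command string. Running it would still need a Kusto client, which is not shown. The account, container, file name and `<SAS-token>` placeholder are hypothetical. Of the properties used, `format` is mentioned in the text, and `ignoreFirstRecord` (skip a csv header row) is an existing ingestion property.

```python
from typing import Dict, Sequence, Union


def _property_literal(value: Union[str, bool]) -> str:
    """Render an ingestion property value: booleans bare, strings single-quoted."""
    if isinstance(value, bool):
        return "true" if value else "false"
    return f"'{value}'"


def ingest_command(table: str, source_uris: Sequence[str],
                   properties: Dict[str, Union[str, bool]]) -> str:
    """Build a `.ingest into table` command.

    Every source URI (including its SAS token) is emitted as an obfuscated
    string literal h'...', so the token is not recorded or logged.
    """
    for uri in source_uris:
        if "'" in uri:
            raise ValueError("URI must not contain a single quote")
    sources = ", ".join(f"h'{uri}'" for uri in source_uris)
    command = f".ingest into table {table} ({sources})"
    if properties:
        props = ", ".join(f"{key}={_property_literal(value)}" for key, value in properties.items())
        command += f" with ({props})"
    return command


# Hypothetical account, container, file name and SAS placeholder
uri = "https://myaccount.blob.core.windows.net/nytaxi/yellow_tripdata_2016-01.csv?<SAS-token>"
print(ingest_command("Taxi", [uri], {"format": "csv", "ignoreFirstRecord": True}))
```

Passing {"format": "parquet"} produces the parquet variant. Several URIs produce a comma-separated source list, which is how several files can be loaded in one run.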

Ingesting parquet data from Azure Blob Storage uses a similar command, which determines the file format from the file extension. Besides csv and parquet, several more data formats such as JSON, JSON Lines, ORC and Avro are supported. According to the documentation, it is also possible to specify the format explicitly by appending with (format='parquet').

Loading the data from parquet took only 30 s, which is already a nice speedup. One can also load multiple parquet files from the blob store in one run, but I did not see a performance improvement: the total was no better than the single-file duration times the number of files. I interpret this to mean that no parallel import is happening:
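The quoted timings can be turned into throughput figures. The baseline below, duration times number of files, is what the multi-file observation above implies when files are imported one after another. All inputs come from the text. The 12-file case is a hypothetical example.

```python
rows_per_file = 10_000_000   # rows in one monthly file (from the text)
csv_ingest_s = 110           # ingest time for one month of csv (from the text)
parquet_ingest_s = 30        # ingest time for the same month as parquet (from the text)

speedup = csv_ingest_s / parquet_ingest_s
csv_rows_per_s = rows_per_file / csv_ingest_s
parquet_rows_per_s = rows_per_file / parquet_ingest_s


def sequential_estimate_s(n_files: int, per_file_s: float = parquet_ingest_s) -> float:
    """Total duration if files are imported one after another (no parallelism)."""
    return n_files * per_file_s


print(f"parquet ingest speedup: ~{speedup:.1f}x")
print(f"csv: ~{csv_rows_per_s:,.0f} rows/s, parquet: ~{parquet_rows_per_s:,.0f} rows/s")
print(f"12 files, sequential: ~{sequential_estimate_s(12):.0f} s")
```

A parallel import would bring the multi-file total noticeably below this linear estimate. Matching it is what suggests sequential processing.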

Once the data is ingested, one can query it nicely in Azure Data Explorer, either in the Kusto Query Language or in T-SQL:
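The original query examples were not preserved. As an illustration only, consider the hypothetical KQL query `Taxi | summarize avg(fare_amount) by passenger_count` and its T-SQL counterpart `SELECT passenger_count, AVG(fare_amount) FROM Taxi GROUP BY passenger_count`. Both compute a grouped mean. The stdlib Python sketch below computes the same result over a few made-up rows, to show what either query returns.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical queries (not the author's):
#   KQL:   Taxi | summarize avg(fare_amount) by passenger_count
#   T-SQL: SELECT passenger_count, AVG(fare_amount) FROM Taxi GROUP BY passenger_count


def avg_fare_by_passenger_count(rows: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Mean fare_amount per passenger_count, as both queries return it."""
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for passenger_count, fare_amount in rows:
        totals[passenger_count] += fare_amount
        counts[passenger_count] += 1
    return {key: totals[key] / counts[key] for key in totals}


# Made-up sample rows for illustration
sample = [(1, 10.0), (1, 14.0), (2, 20.0), (3, 7.5)]
print(avg_fare_by_passenger_count(sample))
```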

Query External Tables

Loading the data into the cluster gives the best performance, but often one just wants to run an ad hoc query on parquet data in blob storage. External tables support exactly this scenario, and this time using multiple files/partitioning did help to speed up the query.
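An external table is declared with a schema, `kind=storage`, a `dataformat`, and one or more storage connection strings written as obfuscated `h@'...'` literals. It is then queried through the `external_table()` function. The Python sketch below only builds the two command strings. The table name, columns, container URI and `<SAS-token>` placeholder are hypothetical. Partitioning clauses are deliberately omitted; the Kusto external-table documentation describes their syntax.

```python
from typing import List, Sequence, Tuple


def create_external_table_command(table: str, columns: List[Tuple[str, str]],
                                  container_uris: Sequence[str],
                                  data_format: str = "parquet") -> str:
    """Build a `.create external table` command over blob storage.

    Connection strings are obfuscated verbatim literals h@'...'
    so that SAS tokens or keys are not logged.
    """
    schema = ", ".join(f"{name}:{kusto_type}" for name, kusto_type in columns)
    sources = ", ".join(f"h@'{uri}'" for uri in container_uris)
    return (
        f".create external table {table} ({schema})\n"
        f"kind=storage\n"
        f"dataformat={data_format}\n"
        f"({sources})"
    )


def count_query(table: str) -> str:
    """A KQL query that reads the external table via external_table()."""
    return f"external_table('{table}') | count"


# Hypothetical names and SAS placeholder
print(create_external_table_command(
    "TaxiExternal",
    [("passenger_count", "int"), ("fare_amount", "real")],
    ["https://myaccount.blob.core.windows.net/nytaxi-parquet?<SAS-token>"],
))
print(count_query("TaxiExternal"))
```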

Querying external data looks similar but has the benefit that one does not have to load the data into the cluster. In a follow-up post I'll do some performance benchmarks. Based on my first experiences, the query engine seems to be aware of some parquet properties like columnar storage and predicate pushdown, because queries return results faster than loading the full data from blob storage (with the 30 MB/s limit) would take.
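The reasoning above is a lower-bound check. If reading every byte at the throughput limit would take longer than the query actually takes, the engine cannot have read all the data. It must have skipped columns or row groups. This is a minimal sketch that assumes the limit means 30 MiB/s (the unit is an assumption) and uses a hypothetical dataset of twelve ~180 MiB monthly parquet files.

```python
def full_scan_seconds(total_mib: float, throughput_mib_per_s: float = 30.0) -> float:
    """Minimum time to read total_mib from blob storage at the given rate."""
    return total_mib / throughput_mib_per_s


def must_skip_data(query_s: float, total_mib: float, throughput_mib_per_s: float = 30.0) -> bool:
    """True if the query finished faster than a full read could possibly take,
    i.e. it cannot have read every byte from blob storage at that rate."""
    return query_s < full_scan_seconds(total_mib, throughput_mib_per_s)


# Hypothetical dataset: twelve monthly parquet files of ~180 MiB each
total_mib = 12 * 180
print(full_scan_seconds(total_mib))
```

For this dataset a full read takes at least 72 seconds, so any query answered faster must be reading only part of the files.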

Export Data

For the sake of completeness, here is an example of how to export data from the cluster back to a parquet file in Azure Blob Storage:
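An export command names the output format, the target storage as an obfuscated `h@'...'` literal, optional properties such as `namePrefix`, and, after `<|`, the query whose result is written. The Python sketch below only builds such a command string. The container URI, `<SAS-token>` placeholder, prefix and query are hypothetical.

```python
def export_to_parquet_command(query: str, container_uri: str, name_prefix: str = "export") -> str:
    """Build an `.export to parquet` command that writes the result of query
    to blob storage; the URI is an obfuscated literal h@'...'."""
    return (
        f".export to parquet (h@'{container_uri}')\n"
        f"with (namePrefix='{name_prefix}')\n"
        f"<| {query}"
    )


# Hypothetical container URI, SAS placeholder and query
print(export_to_parquet_command(
    "Taxi | where passenger_count > 2",
    "https://myaccount.blob.core.windows.net/exports?<SAS-token>",
    name_prefix="taxi",
))
```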